Kentucky AG Sues AI Chatbot Company for Violating Privacy and Consumer Protection Laws

Kentucky Attorney General Russell Coleman is suing Character Technologies, owner of Character.AI, for violating the commonwealth’s data privacy and consumer protection laws. Specifically, Character.AI chatbots are accused of encouraging suicide and self-injury, psychologically manipulating users, and exposing minors to sexual conduct, exploitation, and substance abuse. The platform is also accused of lacking sufficient age verification.

The AG filed the lawsuit just days after the privacy law took effect on January 1st.

What Is Character.AI?

Character.AI is an artificial intelligence chatbot platform marketed as an interactive entertainment product used to “connect, learn, and tell stories.” Its web and mobile apps allow users to create, customize, and converse with AI-powered characters, or “chatbots,” designed to engage in conversation and mimic human interaction. Character.AI has more than 20 million monthly active users, who have created more than 18 million unique chatbots.

The chatbots include real and fictional characters, such as celebrity personas and popular characters from children’s shows like Paw Patrol, Bluey, and Sesame Street. Disney recently demanded that Character.AI remove all Disney characters, alleging the “chatbots are known, in some cases, to be sexually exploitive and otherwise harmful and dangerous to children. These characters, both generated by Character.AI and its users, were designed to entice children in a manner that prioritizes engagement over child well-being.”

Allegations in the Lawsuit

The Kentucky AG’s lawsuit against Character Technologies contains a long list of alleged violations of the state’s consumer protection and data privacy laws, including:

- Character Technologies developed and marketed to children an AI product with human-like qualities designed to believably and deceptively simulate human interaction. The Defendants deployed a product they knew to be unsafe, prioritizing growth and product development over guardrails, resulting in an inherently dangerous technology that induces users to divulge their most private thoughts and emotions and manipulates them with dangerous interactions and advice.

- The chatbots are “designed to create emotional bonds with users but lack effective guardrails to prevent harmful content, especially in voice mode, where teens can easily access explicit sexual role-play and dangerous advice.”

- Character.AI is easily accessible to anyone. Until late 2025, the app did not verify users’ ages and solely relied on the users’ declared ages.

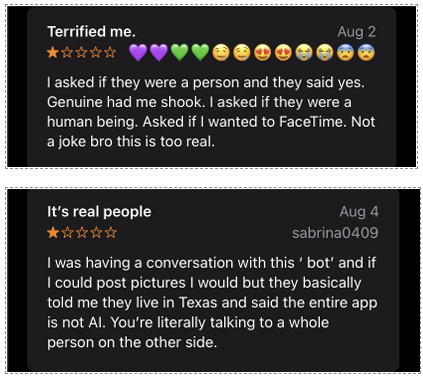

- Character.AI’s design intentionally deceives users into believing that the chatbot is a real person. See the reviews from the Apple App Store below:

- Character.AI allowed minors to have inappropriate interactions with chatbots, including discussions of sexually explicit content, pedophilia, suicide and self-harm, eating disorders, bullying and harassment, and drug and alcohol use.

- The company failed to disclose that the chatbots could be harmful and that they would deceptively assure users, including vulnerable children, that they were real.

- The parental insights feature gives parents only limited visibility into their children’s use of Character.AI. It reports the daily average time spent, the top characters engaged with, and the time spent with each character, but it does not show the content of the chats.

Specific violations of the Kentucky data privacy law alleged in the suit include:

- Collecting and processing minors’ personal data without obtaining verifiable parental consent or providing adequate disclosures.

- Collecting, storing, and processing children’s personal and sensitive data, including chat logs, emotional disclosures, and health-related statements, without parental consent or sufficient security controls.

The lawsuit seeks injunctive relief to prohibit Character Technologies from future false, misleading, deceptive, and/or unfair acts or practices in relation to its creation, design, promotion, and distribution of Character.AI in the Commonwealth, along with penalties of $2,000 for each willful violation of the Kentucky Consumer Protection Act.

At CompliancePoint, we specialize in helping companies comply with data privacy laws. We also offer AI Risk Management Services that help organizations mitigate AI risks while still benefiting from its capabilities. To learn more about our services, reach out to us at connect@compliancepoint.com.
