The age of AI is here. While companies can use AI tools to automate tasks and improve productivity, the technology presents new risks, including:

- Costly fines for violating laws like the EU AI Act and state laws in the US

- Vulnerabilities in AI systems that lead to data security risks

- Privacy risks from an AI system’s use of personal information

- Risks to an organization from flawed AI models, such as hallucinations and bias introduced by training data

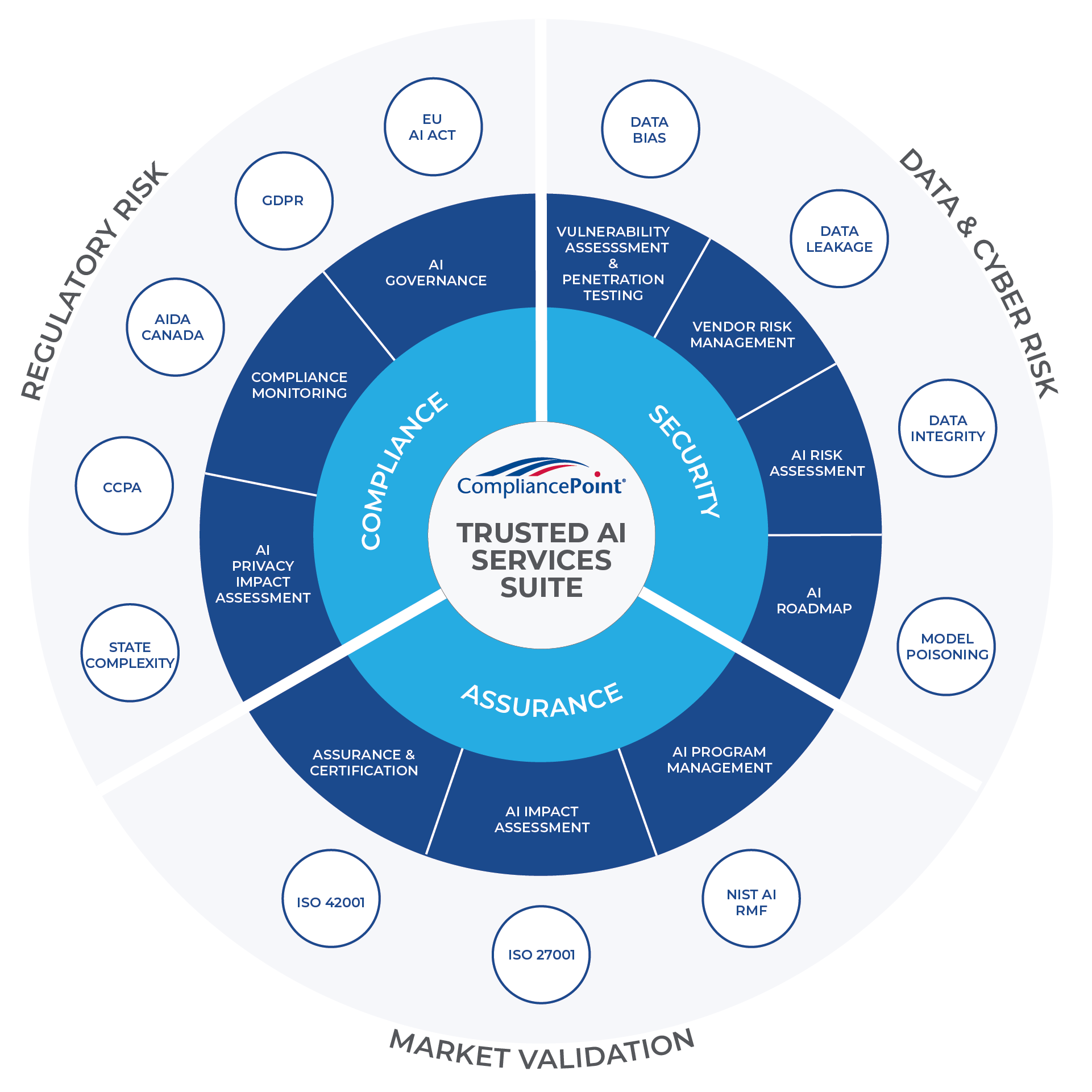

To account for all the risks that come with AI, businesses need a comprehensive risk management program. At CompliancePoint, we have combined our cybersecurity, data privacy, and regulatory compliance expertise to develop AI Risk Management Services that allow organizations to mitigate these risks while still leveraging AI to streamline business operations.

Our Approach to AI Risk Management

Identify

Assess your existing AI systems to identify risks, such as algorithmic bias and security vulnerabilities. Work with key stakeholders to establish a roadmap.

Remediate

Develop and implement strategies and controls to reduce or eliminate the identified risks in the AI systems.

Monitor

Continuously observe AI systems in operation to detect emerging risks and ensure adherence to policies and regulations.

Our Focus

To help you mitigate the risks that come with developing, deploying, and using AI, CompliancePoint focuses on performing the following services for our customers. Our AI Risk Management services can be customized to fit your organization's unique AI systems and vendor network.

AI Roadmap

We work with you to identify business opportunities for the application of AI, establish governance over these initiatives, and mitigate risk throughout the implementation process.

AI Impact Assessments

CompliancePoint uses a systematic process to evaluate the potential effects, both positive and negative, of an AI system's development and deployment on an organization, its stakeholders, and society.

AI Governance

The development of principles, policies, processes, and controls designed to guide the responsible development, deployment, and management of AI systems within an organization.

Vulnerability Assessment

An AI vulnerability assessment will identify, analyze, and prioritize potential weaknesses or security risks within an AI system that could be exploited by malicious actors or lead to unintended consequences.

Penetration Testing

We can conduct controlled and authorized cyberattack simulations to evaluate the security and resilience of an AI system by identifying exploitable vulnerabilities.

AI Privacy Impact Assessments

An AI Privacy Impact Assessment (AIPIA) will identify, assess, and mitigate privacy risks associated with the development, deployment, and operation of an AI system.

Assurance and Certification

We will assess your AI systems to verify adherence to regulations and frameworks such as the EU AI Act or ISO 42001, and provide a roadmap for closing any compliance gaps.

Compliance Monitoring

Continuous monitoring, including audits, to ensure AI systems adhere to relevant laws, regulations, standards, and internal policies throughout their lifecycle.

Vendor Monitoring

CompliancePoint can oversee and evaluate the performance, compliance, and risk management of third-party vendors providing AI technologies or services.

AI Regulations and Frameworks

Our AI Risk Management services can help you align your AI systems with the following regulations and frameworks:

- The NIST AI Risk Management Framework (AI RMF) was developed to help organizations better identify and manage the risks associated with AI technology. It is a voluntary, high-level framework that is industry-agnostic and applicable to various types of AI systems.

- ISO 42001 is the first certifiable standard to provide requirements for establishing, implementing, maintaining, and continually improving an AI Management System (AIMS).

- The EU AI Act is the world's first comprehensive law regulating the use of artificial intelligence (AI) across the European Union. The most serious violations can result in fines of up to €35M (approximately $41M) or 7% of global annual revenue, whichever is higher.

- The GDPR is a comprehensive privacy regulation intended to strengthen data protection for people in the EU. Its strict data privacy rules also apply to personal data used in AI systems, focusing on principles like transparency, consent, and accountability.
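The "whichever is higher" rule in the EU AI Act bullet is simply a maximum of two amounts. The minimal Python sketch below computes that ceiling from a company's global annual revenue in euros. The function name `eu_ai_act_max_fine_eur` is hypothetical, and the result is only the statutory upper bound for the most serious violations, not an estimate of what a regulator would actually impose.

```python
# Ceiling for the most serious EU AI Act violations, as described above:
# EUR 35M or 7% of global annual revenue, whichever is higher.
FIXED_CAP_EUR = 35_000_000


def eu_ai_act_max_fine_eur(global_annual_revenue_eur: float) -> float:
    """Return the maximum possible fine (hypothetical helper, illustration only)."""
    if global_annual_revenue_eur < 0:
        raise ValueError("revenue cannot be negative")
    # Multiply before dividing to avoid the rounding error of the float 0.07.
    percentage_cap = global_annual_revenue_eur * 7 / 100
    # "Whichever is higher": take the larger of the fixed and percentage caps.
    return max(FIXED_CAP_EUR, percentage_cap)


if __name__ == "__main__":
    for revenue in (100_000_000, 1_000_000_000):
        ceiling = eu_ai_act_max_fine_eur(revenue)
        print(f"revenue EUR {revenue:,}: max fine EUR {ceiling:,.0f}")
```

For a company with €100M in revenue, 7% is only €7M, so the €35M fixed amount sets the ceiling; at €1B in revenue, the 7% share (€70M) becomes the higher figure.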

Get started with AI Risk Management

10 Billion+ Records Audited

150+ Cases as an Expert Witness

2,500+ Companies Served

+96 Net Promoter Score: Our Customers Love Us!